Introduction

Stanford University, often heralded as one of the premier institutions of higher learning in the world, has a rich and storied history that dates back to its founding in 1885 by Leland Stanford and his wife, Jane. Initially established as a memorial to their only child, Leland Stanford Jr., who passed away at a young age, the university has grown from its humble beginnings into a global leader in education, research, and innovation. Nestled in the heart of Silicon Valley, Stanford has played an instrumental role in the development of many industries, especially technology and entrepreneurship. Its alumni include countless influential figures in business, science, and public service, making it a central player in shaping the contemporary landscape of higher education and societal progress. This article delves deep into the various facets of Stanford’s history, exploring its founding, growth, contributions, and continuing legacy as a cornerstone of knowledge and innovation.

Founding of Stanford University: The Vision of Leland and Jane Stanford

The inception of Stanford University can be traced to the vision of Leland and Jane Stanford. After their only son, Leland Stanford Jr., died of typhoid fever in 1884 at the age of 15, the grieving Stanfords resolved to create an institution that would honor his memory by educating young people and preparing them to thrive in a rapidly changing world. This vision led to the establishment of the university in 1885, when the Stanfords signed its Founding Grant, a charter that set out a commitment to academic excellence that has endured over the years.

The Stanfords, both prominent figures in California’s social and economic life, funded the university with the fortune Leland Stanford had made as one of the builders of the Central Pacific Railroad. They believed that a university should not serve only the elite but should open its doors to students from varied backgrounds, a principle reflected in their decision to admit both men and women and to charge no tuition. This inclusive outlook was relatively progressive for the time and laid the groundwork for the institution’s diverse and vibrant student population.

The university opened its doors in 1891, with a strong emphasis on the liberal arts and sciences. The inaugural class consisted of just 559 students, yet the spirit of innovation and exploration was palpable and set the tone for Stanford’s future. The campus, located on the San Francisco Peninsula, was designed by the Boston firm Shepley, Rutan and Coolidge, led by architect Charles A. Coolidge, in a blend of Richardsonian Romanesque and California Mission Revival styles, with the grounds planned by Frederick Law Olmsted. The design aimed to create a learning environment surrounded by natural beauty, which remains a defining feature of the campus to this day.

Throughout its early years, Stanford faced numerous trials, most notably the financial difficulties that threatened its survival after Leland Stanford’s death in 1893, when his estate was tied up in legal proceedings, including a federal lawsuit. Jane Stanford’s commitment to their shared vision remained steadfast: she kept the university running largely from her own resources until the estate was settled. Eventually, the Stanford endowment laid a financial foundation that would propel the university into the future, allowing for expansion and innovation across its programs and facilities.

By the end of the 19th century, Stanford’s first graduates were already making an impact in various fields. The university quickly established itself as an institution of higher education that championed both practical skills and theoretical knowledge. Its motto, “The wind of freedom blows,” epitomized the university’s ethos, encouraging students to think critically and independently while challenging social norms. Stanford continued to evolve, with a focus on aligning its curriculum with the needs of an industrializing society.

Growth and Development: From a Small College to a Major Research Institution

In its early years, Stanford University focused primarily on education in the liberal arts, sciences, and engineering. However, as the 20th century unfolded, the university began a remarkable transformation into a major research institution recognized for its groundbreaking contributions. This shift was fueled by a combination of visionary leadership, a commitment to innovation, and the increasing centrality of research in higher education.

Under the leadership of presidents such as David Starr Jordan and later Ray Lyman Wilbur, Stanford expanded its academic offerings and infrastructure, including specialized research centers that played pivotal roles in various scientific fields. The growth of engineering marked a significant turning point: as demand for engineers surged during the industrial boom, Stanford rapidly developed its engineering programs, organized as the School of Engineering in 1925, and became a major training ground for professionals who would contribute to infrastructure projects across the country.

In the mid-20th century, Stanford began to gain national attention for its robust contributions to scientific research, particularly during and after World War II. The university developed significant partnerships with government entities, including the U.S. military and various federal research projects, which brought substantial funding and resources to its research initiatives. This environment fostered an atmosphere of scientific inquiry and exploration that led to crucial advancements in fields such as medicine, technology, and environmental studies.

Simultaneously, Stanford expanded its physical footprint. New state-of-the-art facilities and laboratories not only attracted top-tier faculty and researchers but also gave students opportunities for hands-on experience. The university invested heavily in its libraries, laboratories, and research centers, which became hubs of innovation and collaboration. The faculty drawn to Stanford included many Nobel laureates and renowned scholars who would shape research and academic discourse in their fields.

As the academic programs at Stanford continued to diversify, the university also began to emphasize interdisciplinary studies, recognizing that complex global challenges required holistic approaches drawing on multiple fields of study. This shift aligned with the broader trends in academia, where the lines between disciplines began to blur, fostering environments where tackling real-world problems became increasingly collaborative.

By the latter half of the 20th century, Stanford had established a global reputation for research excellence, particularly through its ties to Silicon Valley, which became a hotbed of technological advancement. The university’s proximity to major tech companies and startups, reinforced by the Stanford Research Park it opened on university land in 1951, created a synergistic relationship, with professors and graduates playing pivotal roles in the founding and development of influential companies.

Stanford University’s growth and development reflect a commitment to academic excellence, innovation, and research that has permeated its culture. The institution has consistently embraced change while staying true to its foundational principles, positioning itself as a leader in education and research for decades to come.

Notable Contributions: Stanford’s Impact on Science and Technology

Stanford University has consistently been at the forefront of scientific and technological advancements throughout its history. From its early days, the institution has fostered an environment ripe for innovation, contributing significantly to various fields, including medicine, engineering, computer science, and environmental studies.

The university’s emphasis on research began to pay dividends early in the 20th century, when faculty and students collaborated on pioneering projects. One of the most notable contributions came in medicine, where Stanford has been a leader in research and education. Stanford’s medical school, established in 1908 when the university took over Cooper Medical College in San Francisco, went on to make important discoveries in areas such as cancer research, genetics, and biomedical engineering. The university’s research hospitals and partnerships with healthcare organizations have accelerated advancements in patient care, significantly impacting public health.

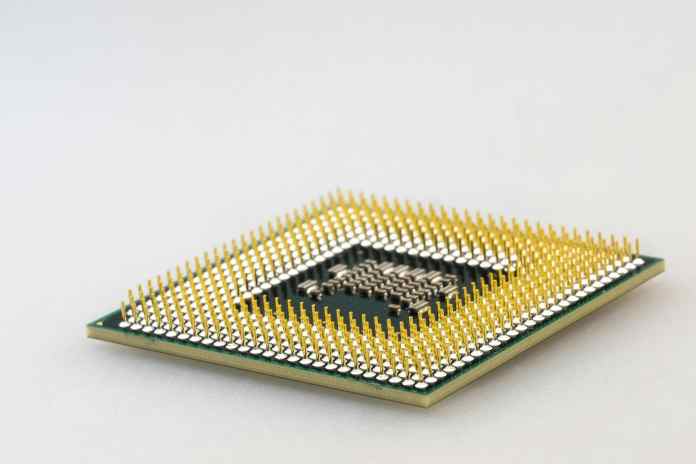

In the realm of engineering, Stanford made significant strides with its Department of Electrical Engineering, which became a cornerstone in the development of modern technology. The university also played an important role in the early growth of the semiconductor industry: Stanford’s engineering research and graduates helped foster the region’s electronics industry, which grew up around silicon technology and the integrated circuits that serve as the backbone of contemporary electronic devices.

Moreover, the emergence of computer science as a discipline at Stanford laid the groundwork for significant contributions to the digital age. The Stanford Artificial Intelligence Laboratory, founded in 1963 by John McCarthy, played an instrumental role in the development of AI technology. Stanford alumni and faculty have pioneered advancements in machine learning, robotics, and data science, shaping the trajectory of the tech industry.

The university’s innovative spirit also found its way into the burgeoning field of renewable energy. Stanford’s commitment to addressing climate change has produced influential research into solar energy, sustainable practices, and environmental management. Faculty in its Department of Earth System Science have led initiatives aimed at understanding and combating global warming, establishing Stanford as a leader in promoting sustainability.

The university’s contributions extend beyond academia; Stanford played a part in the founding of pivotal companies that transformed the tech landscape. Hewlett-Packard, Cisco Systems, Google, and many other successful enterprises were founded by Stanford students, graduates, or staff. This relationship between academia and industry exemplifies Stanford’s unique role in fostering innovation and promoting entrepreneurship, with its Office of Technology Licensing facilitating the transfer of research findings into practical applications.

Stanford’s commitment to innovation continues to flourish, as seen in its focus on interdisciplinary research and collaboration. The university has established numerous centers and institutes that facilitate cross-departmental partnerships, ensuring that varied perspectives converge to tackle the complexities of modern challenges.

In summary, Stanford University’s contributions to science and technology are extensive and deeply ingrained in its history. Its commitment to research excellence has not only advanced knowledge but has also transformed industries and enhanced the quality of life for people around the globe.

Cultural and Social Changes at Stanford: A Reflection of American History

The history of Stanford University is intertwined with the cultural and social shifts that have shaped the United States over the past century. As societal norms evolved, so too did the university’s policies, addressing issues ranging from civil rights to gender equality, ultimately reflecting the larger narrative of American history.

In its early years, Stanford’s student body was predominantly male, mirroring the higher education trends of the time, even though the university had admitted women since it opened in 1891. In 1899 Jane Stanford capped women’s enrollment at 500, a restriction that was eased over the following decades and lifted entirely in 1973. As the movement for women’s rights grew, Stanford made further strides toward gender inclusivity; by the 1970s, efforts to recruit a diverse student body had gained momentum, encouraging greater participation of women across various fields of study. Today, women make up a significant portion of the student and faculty population, exemplifying the university’s commitment to inclusivity.

The civil rights movement of the 1960s served as a catalyst for social change at Stanford, prompting an examination of issues related to race and equity within the university. Students became increasingly vocal about the need for diversity, equality, and representation, leading to the establishment of ethnic studies programs. This move not only enriched the academic offerings but also fostered a more inclusive campus culture that resonated with broader societal movements.

Throughout the latter half of the 20th century, as the United States grappled with the implications of the Vietnam War and other social upheavals, Stanford students actively engaged in activism. The university campus became a site for protests and discussions advocating for change, reflecting the evolving political landscape. Administrators began to realize the importance of addressing student concerns openly and fostering a dialogue surrounding sociopolitical issues.

Stanford has also worked to support LGBTQ+ students and to create an environment that welcomes them. Initiatives promoting LGBTQ+ visibility, along with dedicated support services and events, have helped make the campus more inclusive.

As Stanford navigated these societal changes, the institution also embraced technological advancements that influenced campus culture. For instance, the rise of the internet and digital technology revolutionized communication and learning. The establishment of student-run organizations focusing on technology, social justice, and environmental issues reflected a dynamic campus that encourages students to engage with contemporary global challenges.

Stanford’s response to the evolving societal landscape included a proactive approach toward fostering a diverse and inclusive community, launching programs focused on building cultural competence and understanding among students. Events that celebrate cultural heritage, identity, and activism have become part of Stanford’s identity, reinforcing its commitment to social responsibility.

In summary, the cultural and social changes at Stanford University offer a mirror to the broader progression of American society. The university has shown a willingness to adapt and evolve, responding to the needs and concerns of its students while prioritizing inclusivity and social justice. This commitment positions Stanford as not just an academic institution but as a dynamic entity that actively engages with and reflects the cultural currents of its time.

The Global Influence of Stanford University: Educating Leaders for Tomorrow

Stanford University has long been recognized as a leader in shaping the future of higher education and educating the leaders of tomorrow. Its global influence is profound, as the institution produces graduates who go on to impact various sectors around the world, from technology to politics, healthcare, and beyond.

With an emphasis on fostering innovation and critical thinking, Stanford’s curriculum equips students with the skills needed to navigate the complexities of the modern world. The university attracts a diverse student body from all corners of the globe, facilitating cross-cultural exchanges and collaborative learning experiences. This vibrant environment encourages students to engage with differing perspectives, preparing them to lead discussions around pressing global issues, such as climate change, economic inequality, and social justice.

Stanford’s international partnerships and initiatives further enhance its global reach. Collaborations with universities and research institutions worldwide facilitate knowledge-sharing and joint research ventures. The university’s various global centers promote intercultural understanding and provide students the opportunity to study abroad, immersing themselves in diverse cultures while addressing global challenges.

Additionally, Stanford’s alumni network includes influential leaders who are effecting change within their respective fields. Notable figures include former U.S. President Herbert Hoover, a member of Stanford’s first graduating class, former U.S. Secretary of Defense William J. Perry, Google co-founders Larry Page and Sergey Brin, and renowned authors and scholars. This extensive network not only connects graduates but also offers current students mentorship opportunities, fostering a sense of community and lifelong connections that span the globe.

Moreover, Stanford’s focus on entrepreneurship has made it a beacon of innovation. The Stanford Venture Studio and other entrepreneurial programs support students who want to create startups and launch social enterprises. This emphasis not only fuels economic growth but also contributes to societal advancement by addressing challenges through creative solutions.

In recent years, Stanford has placed a significant emphasis on interdisciplinary education, recognizing that the complexity of global issues requires multifaceted approaches. The university encourages collaboration between different fields, allowing students to develop comprehensive strategies that leverage diverse skill sets, ultimately positioning them as future leaders capable of effecting meaningful change.

As the stakes for global leadership continue to rise, Stanford’s commitment to social responsibility remains evident. The university actively engages students in initiatives that promote civic responsibility and ethical leadership. Programs focused on social entrepreneurship empower students to apply their education to address pressing social issues, aligning their professional aspirations with the greater good.

In conclusion, Stanford University’s global influence is undeniable. Through its commitment to academic excellence, innovation, and social responsibility, the institution prepares students to become leaders who will shape the future. The diverse experiences, interdisciplinary approaches, and opportunities available at Stanford enable graduates to navigate an increasingly interconnected world with purpose and vision.

Conclusion

The history of Stanford University is a testament to the power of vision, innovation, and commitment to education. From its founding in 1885 by Leland and Jane Stanford, the institution has evolved into a global leader in academia and research. Each era of Stanford’s history reflects broader societal changes, embracing inclusion, diversity, and the values of its founding principles. Through its notable contributions to science, technology, and social responsibility, Stanford continues to shape the landscape of higher education and prepare future leaders for the complexities of an interconnected world. As it moves forward, the university’s enduring legacy is secured by its ongoing commitment to excellence, innovation, and making a positive impact on society.
